Graph Convolution Network (GCN) and Graph Attention Network (GAT)

- Introduction to GCN

- GraphSAGE

- Defferrard, Michaël, Xavier Bresson, and Pierre Vandergheynst. "Convolutional neural networks on graphs with fast localized spectral filtering." Advances in neural information processing systems 29 (2016).

- Kipf, Thomas N., and Max Welling. "Semi-supervised classification with graph convolutional networks." International Conference on Learning Representations (2017).

- Hamilton, Will, Zhitao Ying, and Jure Leskovec. "Inductive representation learning on large graphs." Advances in neural information processing systems 30 (2017).

- Wu, Felix, Amauri Souza, Tianyi Zhang, Christopher Fifty, Tao Yu, and Kilian Weinberger. "Simplifying graph convolutional networks." In International conference on machine learning, pp. 6861-6871. PMLR, 2019.

- Veličković, Petar, Guillem Cucurull, Arantxa Casanova, Adriana Romero, Pietro Lio, and Yoshua Bengio. "Graph attention networks." International Conference on Learning Representations (2018).

1. Laplacian Matrix on Graph

The Laplacian matrix of a -vertex graph is defined as where is the adjacent matrix with weights and is the degree matrix. The normalized Laplacian matrix is defined as The Laplace operator is defined as In -dimensional space, we have Similarly, if we consider a -vertex graph and a function on it, where is the function value of the -th vertex in the graph. Then the Laplacian operation on this function on graph should be In a vector form, we can recover the definition of Laplacian matrix on graph , For an undirected graph, the Laplacian matrix is a real symmetric matrix and therefore we have the spectral decomposition There are also some properties for Laplacian matrix ,

- There is at least one eigenvalue 0.

- All the eigenvalues are non-negative. For normalized Laplacian matrix, all the eigenvalues are between 0 and 2, and the summation is .

- If there are eigenvalues with value 0, then there are mutually unconnected sub-graphs within the graph.

2. Fourier Transformer

Recall Fourier transformer of function , where is a set of orthogonal basis. We also have the inverse Fourier transformer,

For the basis function , notice that it is the eigenfunction for Laplacian operator , Similarly, the eigenvectors of graph Laplacian matrix should also be the basis for the Fourier transformer on the graph , i.e., Therefore, Similarly, we have the inverse Fourier transformer on graph ,

3. Convolution on Graphs

Denote the continuous convolution for two functions , as well as the discrete convolution, Recall the property for convolution and Fourier transformer, Therefore, For convolution operations on graph , similarly, we have If we parameterize directly by trainable parameters , This is the convolution filter on graphs.

4. Fast Localized Spectral Filtering

Recall that in the above graph convolution, is a vector depending on the eigenvalues of the Laplacian matrix , we can rewrite it as and the graph convolution is then Notice that it can also be seen as a signal filtered by as, The parameterization in the previous section can be seen as a non-parametric filter, i.e., There are however two limitations with non-parametric filters:

- they are not localized in space

- their learning complexity is in , the dimensionality of the graph.

These issues can be overcome with the use of a polynomial filter, Under the polynomial filter, the convolution is given by Notice that implies , where is the shortest path distance between and . Consequently, spectral filters represented by order polynomials of the Laplacian matrix are exactly -localized. Besides, their learning complexity is , the support size of the filter, and thus the same complexity as classical CNNs.

However, the matrix computation of matrix part still involves the computation complexity. Recall that the Chebyshev polynomial of order may be computed by the stable recurrence relation with and . These polynomials form an orthogonal basis for , the Hilbert space of square integrable functions with respect to the measure . A filter can thus be parametrized as the truncated expansion, where is the normalized diagonal matrix with scaled eigenvalues that lie in . The convolution operation is therefore, Here . Denoting , we can use the recurrence relation to compute with and . The entire filtering operation then costs operations, where is the number of edges in the graph .

5. Fast Approximate Convolutions on Graphs

Recall that in the previous section, we have the convolution operation on vector of a graph using Chebyshev polynomials, If we consider the simple case of and consider the normalized Laplacian matrix , and approximate , which is the eigenvalue upper bound for , we have In practice, it can be beneficial to constrain the number of parameters further to address overfitting and to minimize the number of operations (such as matrix multiplications) per layer. This leaves us with the following expression using , Note that now has eigenvalues in the range . Repeated application of this operator can therefore lead to numerical instabilities and exploding/vanishing gradients when used in a deep neural network model.

To alleviate this problem, we introduce the following renormalization trick: with and .

We can generalize this definition to a signal with input channels (i.e. a -dimensional feature vector for every node) and filters or feature maps as follows, where is now a matrix of filter parameters and is the convolved signal matrix. This filtering operation has complexity , as can be efficiently implemented as a product of a sparse matrix with a dense matrix.

6. Simple GCN

From Kipf and Welling (2017) above, for a input with input channels (i.e. a -dimensional feature vector for every node) and filters or feature maps as follows, where is now a matrix of filter parameters, is the non-liner activation function and is the convolved signal matrix. For a -depth network, we will repeat the above procedure times.

This paper hypothesizes that the nonlinearity between GCN layers is not critical - but that the majority of the benefit arises from the local averaging. The resulting model is linear, but still has the same increased “receptive field” of a -layer GCN, i.e., Under this network, the authors obtain a comparable experiment results with the previous structure, and the computation cost / the number of parameters is significantly reduced. Notice that is a -sparse matrix, where is the number of edges in the graph , and the exponential computation for sparse matrix is quite fast.

7. GraphSAGE

- Hamilton, Will, Zhitao Ying, and Jure Leskovec. "Inductive representation learning on large graphs." Advances in neural information processing systems 30 (2017).

- GraphSAGE

We now consider the graph convolution from a different aspect. We mimic the logic of convolution on graph: for a 3x3 convolution layer, we conduct weighted average of the value of the 1-step neighbors of the center point. The next layer convolution operation increases the "receptive field" to the 2-step neighbors of the center point.

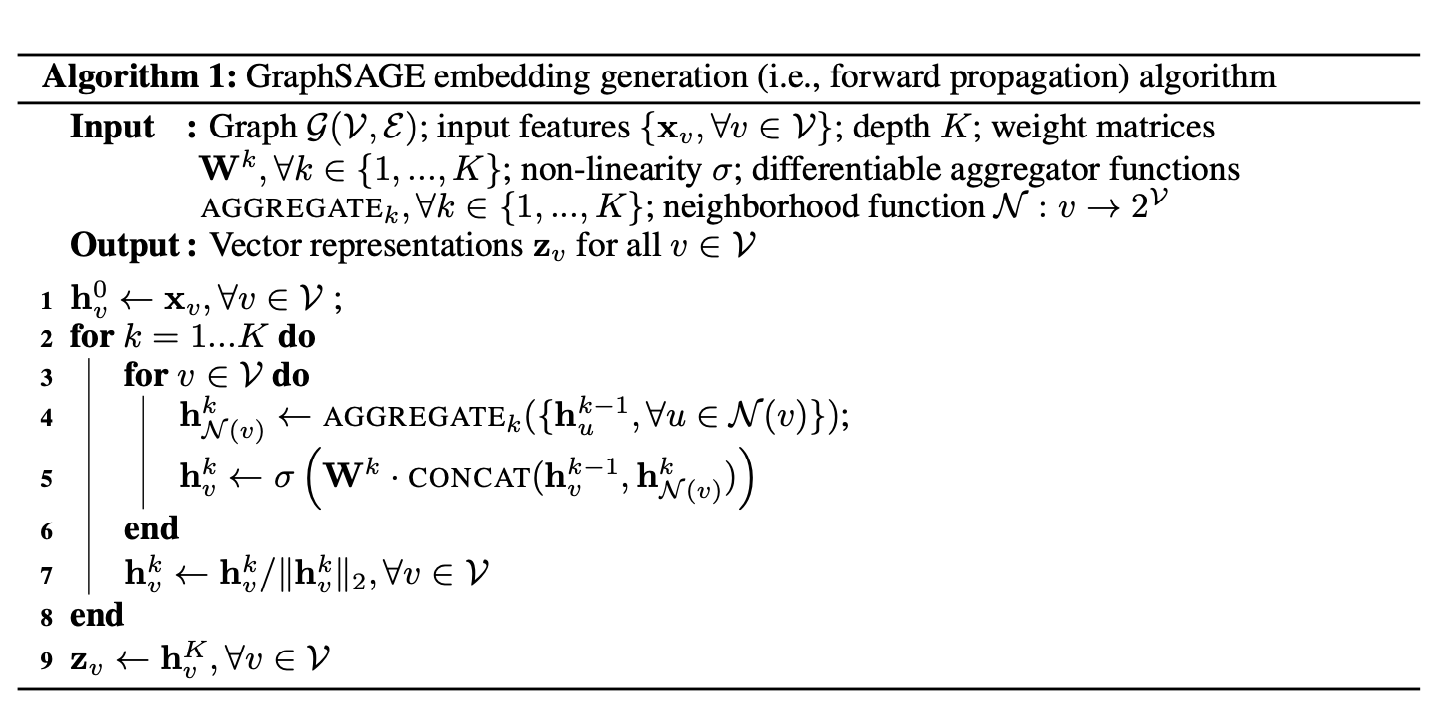

The algorithm is given below. For each step , we first gather and aggregate all the hidden vecotr from previous step in the neightbourhood of vertex , i.e., . Then in line 5, we combine the neighborhood information vector with of .

This process has been repeated times and the information from all the -step neighbor will be included. Instead of training a distinct embedding vector for each node, this algorithm train a set of aggregator functions that learn to aggregate feature information from a node’s local neighborhood.

There are several choices of aggregator function:

Mean aggregator: which is the average of all the .

Pooling aggregator: each neighbor’s vector is independently fed through a fully-connected neural network; following this transformation, an elementwise max-pooling operation is applied to aggregate information across the neighbor set:

8. Graph Attention Network

The input to our layer is a set of node features, , where is the number of nodes, and is the number of features in each node. The layer produces a new set of node features (of potentially different cardinality ), , as its output.

Consider a self-attention mechanism on the nodes where is the weight matrix and is the attention mechanism .

Consider the masked attention softmax, which normalize across all the neighbors of node , We consider the attention mechanism as a single-layer feed-forward neural network, parametrized by a weight vector , and applying the LeakyReLU non-linearity, where is the concatenation operation. Therefore, the one-layer graph attention is computed as follows, Utilizing the multi-head attention mechanism, we can extend the network structure to where represents concatenation, are normalized attention coefficients computed by the -th attention mechanism , and is the corresponding input linear transformation's weight matrix. Note that, in this setting, the final returned output, .